In ’59, Isaac Asimov, the scientist and sci-fi writer, got asked to report on “How do people get new ideas?” He reckoned that creativity comes from having deep and diverse knowledge on the subject and linking ideas from different areas. The best new ideas may seem crazy and come from people with odd personalities and a solid foundation of knowledge. Moreover, discussing ideas casually can lead to valuable new insights. Although there are many other essays and books on creativity, for us we need to think: “How do data scientists get new ideas?”

Disclaimer: Opinions are my own and not the views of my employer. I frequently update my articles. This is version 1.0 of this article.

This article talks about how ideas are generated in the modern industrial setting. While in academia, scholars produce many theoretical ideas and projects, the industry has a unique requirement that ideas must be practical and beneficial for businesses. Therefore, our focus is on the industry, although the information may be applicable to other settings as well. Good ideas can come from any level of an organization, but it’s important to have a process to allow good ideas to surface and filter out bad ones. There are some useful resources for generating creative ideas, but our focus will be on concepts related to data science.

I found two effective ways to create new data science projects: 1) Gap Analysis and 2) Developing New Technological Capabilities. I’ll explain each method, including its advantages and disadvantages, and provide some examples for both below.

Gap Analysis

When using gap analysis, we often inquire about known issues in the current process of creating value for customers. We ask if any part of the process is:

- expensive and requires significant resources,

- produces inaccurate results that don’t meet the user’s expectations,

- is difficult to use or understand, or

- is slow and unable to scale to provide results quickly?

Teams often have a substantial backlog of ideas that can be overlooked during daily operations. As a result, it’s crucial to maintain a repository of long-term and permissible projects that can be revisited when resources become available.

Projects developed using gap analysis are generally easier to rationalize because the underlying pain points are well-established throughout the team. Additionally, the potential benefits of these projects are often more straightforward to quantify.

The difficulty lies in fully comprehending the solution to the problem at hand. It may involve researching similar use cases in both industry and academic literature to identify an appropriate solution. Even then, choosing the most optimal solution from a range of possibilities can be a challenging task.

Nonetheless, the primary obstacle with these types of projects is that they typically offer only marginal gains. A company that solely concentrates on incrementally enhancing its existing products and solutions will eventually be surpassed by a more inventive competitor capable of thinking outside the box and creating revolutionary projects.

Here are a few examples of projects that I have initiated using gap analysis:

- Differences in the performance of a system across different markets can indicate an opportunity to implement multilingual models. Segmenting the system by relevant metrics such as region, country, gender, age, and other demographics, as well as subsystem types such as mobile or web-apps, and comparing their performances can reveal gaps in the current system.

- Comparing a system’s key performance indicators (KPIs) with industry benchmarks or other companies’ available information can identify areas for improvement. For instance, if the average industry click-through rate (CTR) is 0.2%, a system-wide CTR rate of 0.1% indicates significant room for improvement.

- Examining common errors, significant bugs, engineering dissatisfaction, and difficulties in adding new features to a subsystem can also highlight gaps that need to be addressed. While this is mainly relevant to architectural problems, with better models, deployment can also become more manageable. Redesigning a demand forecasting model to use streaming models helped us overcome repetitive bugs and streamline the entire process.

- Analyzing and categorizing customer complaints is another valuable resource for identifying gaps in the system. Although it may be easy to dismiss customer experience as merely subjective, their feedback can provide insights into their expectations based on the performance of competitor systems.

Therefore, it’s relatively simple to implement gap analysis procedures for teams and companies. Here are some common steps:

- Define valuable and well-behaved key performance indicators (KPIs) for each system and track them.

- Track the KPIs for each subsystem and use dashboards to compare them across various segments.

- Conduct frequent reviews of internal and external customer complaints and aggregate their reports.

- Track industry benchmarks or competitor performance. We can even conduct experiments on competitors’ products to measure their performance.

- Maintain a repository of backlogged ideas (larger ones) and review them on a predefined frequency.

Developing New Technological Capabilities

Another major way to discover new project ideas is to keep an eye on recent external developments in the field or adjacent fields of science. Ask yourself if there are any:

- new technologies developed in academia that could be adapted to your problem?

- new use cases that employ your common techniques and capabilities? Can you incorporate that use case as a new feature in your product?

- developments in an adjacent scientific area that is similar to your problem?

Venturing into novel ideas often yields 10X returns that can significantly elevate business outcomes and enrich customer interactions, providing a substantial competitive edge in the market. Yet, such projects come with a high degree of risk due to the dearth of expertise and substantial resource requirements. Therefore, it is prudent to maintain a diverse portfolio comprising both high-risk, high-reward initiatives and less-risky ventures based on a comprehensive gap analysis.

Through my own experience, I’ve discovered a few exciting technological capabilities projects:

- by utilizing publicly available large-scale language models, we were able to significantly enhance the performance of our NLP model and generate an additional XX million dollars in company revenue.

- employing an open-source library that is commonly applied in similar Kaggle competitions enabled us to substantially improve a key performance indicator for our system.

To discover project ideas from external sources, there are several effective approaches:

- One is to have regular learning ceremonies where team members can openly discuss and explore external technologies and breakthroughs.

- Another is to allocate a small percentage of your team’s resources and time to explore new ideas and technologies, even if they don’t immediately appear to be applicable.

- Encouraging team members to review literature and explore data science competitions can also yield valuable insights into the latest methods and technologies being applied to your specific problem.

- Furthermore, it’s essential to remain open and receptive to advancements in adjacent areas and consider applying new techniques to your own problem. For instance, many deep-learning architectures have been transferred from computer vision to NLP and vice versa.

- Lastly, continuously exploring new ideas from academia, conferences, and industry exhibitions can provide a wealth of inspiration and fresh perspectives.

Diminishing Return Law: first it works, then it doesn’t!

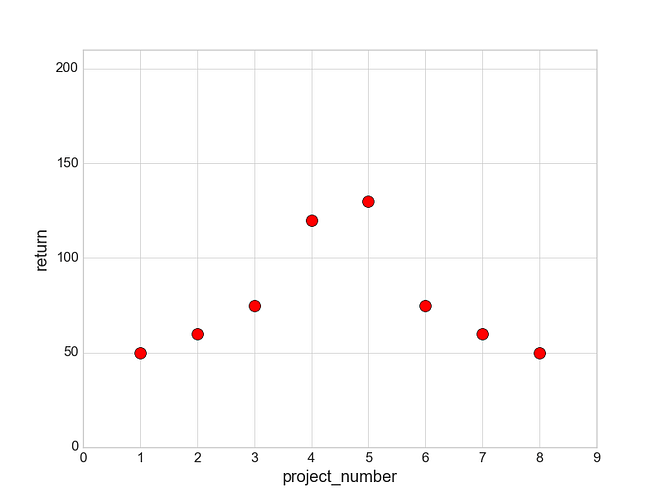

The law of diminishing returns, proposed by French economist Turgot, is a frequently used method by managers to determine the worth of working in a particular area. This law states that the marginal output will eventually decrease as more of a single factor is used in production while holding other factors constant. This happens because the fixed inputs are being distributed over a greater number of units of the variable input. In simpler terms, the benefits of deploying more projects in a single area will increase to a point and then start to decline.

Based on my experience, the return on projects doesn’t always follow the law of diminishing returns. Typically, the first and second machine learning models implemented in each area tend to be highly successful. Unsurprisingly, any model outperforms static rules or simple heuristics. However, after the initial successes, the returns on subsequent projects tend to be unpredictable. For instance, a previously failed new technique might become mature enough to yield substantial benefits after two years of development. Alternatively, a series of successes and failures of varying degrees might occur in a particular application area. Due to this unpredictability, I personally prefer to use Traser Bullets instead of relying on the law of diminishing returns.

Tracer Bullets

I learned about the concept of tracer bullets from the book “The Pragmatic Programmer,” which I found to be a valuable tool. The concept is similar to a tracer bullet that illuminates the path to the target. To analyze critical components of a project, we can use simpler models or code. Tracer bullets, however, are not the same as prototypes. Prototypes, also known as proof of concept (PoC) code, are smaller versions of the final product. In contrast, tracer bullets are used to inspect specific critical parts of a problem.

When it comes to designing tracer bullet sub-projects, a useful approach is to use the mental model of “peeling the risk onion.” This method involves identifying the critical technical and non-technical risks associated with the project and then designing sub-projects to estimate the resources and difficulty required for each aspect. To clarify this concept, let’s consider an example.

Some time ago, my team was given the task of implementing a real-time NLP model in production to meet a customer’s demands. During the project, we encountered several crucial questions, including:

- Is it possible to meet the 50 ms inference requirement for the model?

- Can the model be scaled to handle the large volume of data that we are processing?

- Can we transmit messages to the downstream Kafka server from our Python-based prediction module?

- Can we achieve the required prediction accuracy?

After analyzing the critical risks involved in the project, we determined that the second bullet point had the highest level of risk, followed by the third point. However, we were able to solve the third issue, albeit with some additional time spent fixing the serializers for Kafka messages. Additionally, we found that a basic model we developed was able to meet the accuracy requirements. As a result, we prioritized testing and improving our ability to scale models using a combination of caching and other methods. We also tested a similar large language model before ultimately focusing on improving our initial model.

Filtering Bad Ideas

Filtering out bad ideas is crucial because exploring every possible idea can waste significant resources. Unfortunately, bad ideas can still gain traction in both small and large companies. The author recalls significant initiatives in both types of companies that were not well-regarded by the technical teams.

To filter out bad ideas quickly, it’s important to consider the following:

- Theoretically wrong: If something is theoretically unsound, it’s likely to be a bad idea. Creating a toy example and discussing it with the team can help illustrate why it’s not a good option.

- Dominance strategy: If a technique, model, or architecture already exists that provides a better solution, using other models would be wasteful. Although sometimes less dominant strategies are acceptable for strategic reasons, and fast delivery, meeting business targets should always be the priority.

- Pet projects: It can be challenging to remove inefficient projects or legacy code that doesn’t deliver. Delivering one successful project can highlight the limitations of the old stack and make it easier to replace it with a more effective solution, like using advanced Python libraries instead of outdated Scala code.

Eliminating bad ideas as early as possible is critical to avoiding the wastage of significant resources. However, it is also common for high-ranking managers to believe that their intuition can be applied to areas that are arguably irrelevant. To avoid this, it is crucial to remain open to new ideas and rely on data and hard evidence to accept or reject them.

How to prioritize data science projects?

Prioritizing data science projects is not very different from other software projects. To prioritize data science projects, we estimate the following dimensions and order them:

- Business impact and intangible benefits,

- Resource intensity,

- Risks and expected value of objectives.

Be open to exploring ambitious or difficult ideas. Upon further analysis, ways to simplify project delivery may be found. The portfolio of projects can be adjusted based on the business appetite for risk, including ambitious, risky, and certain but less beneficial projects.

Summary

We talked about two ways to create new ideas in data science: gap analysis and developing new technological capabilities. Gap analysis looks inside the process to find areas that are slow, inaccurate, or expensive and improve them. Breaking down data into segments and comparing them to industry benchmarks can help identify gaps in the system. The second method involves following external breakthroughs and capabilities, which can provide 10X improvements.

Using sub-projects can help analyze the riskier parts of a project. A portfolio of both high-risk, high-reward projects and more certain ones can create a healthy balance for a data science team. It’s important to filter out bad ideas early on using hard data and facts to allow the team to focus their resources on winning ideas.