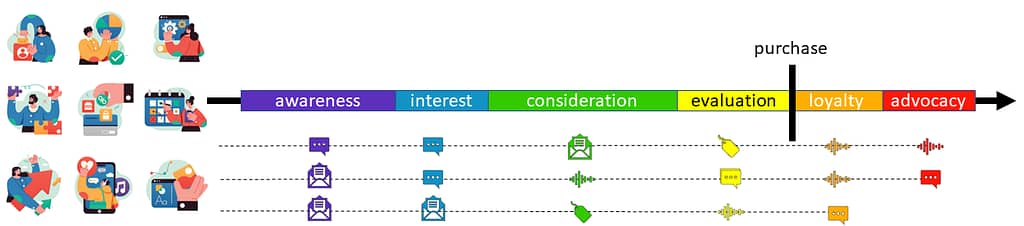

When I asked ChatGPT, “Could you please provide a conceptual model of marketing funnel optimization from the data science perspective?” it provided an answer along these lines:

- Data science can analyze customer behavior and improve each stage.

- Optimizing the marketing funnel aims to increase conversion rates for more revenue.

- At the awareness stage, data analysis identifies effective channels for reaching potential customers. Analyzing website traffic, social media engagement, and advertising performance helps determine the most effective channels.

- In the interest stage, data analysis personalizes content and messaging for potential customers. By analyzing customer behavior and preferences, businesses tailor marketing messages to increase the likelihood of conversion.

- In the decision stage, data analysis identifies barriers to purchase. Customer feedback and website analytics help businesses address why customers may be abandoning shopping carts or not completing purchases.

- In the action stage, data analysis measures marketing campaign effectiveness. Analyzing conversion rates, revenue, and customer lifetime value helps determine the most profitable campaigns for businesses to adjust their strategies accordingly.

- Overall, the marketing funnel optimization conceptual model from a data science perspective involves analyzing data at each stage of the funnel to identify areas for improvement and optimize marketing campaigns for maximum conversion rates and revenue.

While the above method is the current dominant mode of thinking on how a data science team should be utilized in optimizing marketing funnels, it suffers from critical deficits:

- This approach involves a lot of manual intervention. At each stage, an analyst investigates the historical data or sets up experiments to find patterns, segment customers, and optimize their interactions.

- The communications and interactions with customers are not fully personalized. Instead of treating each customer as a unique individual with a unique set of needs, we bulk them into segments and try to find standard methods to interact with each segment.

- Each stage of the funnel is usually optimized independently and not in relationship with other stages. For example, we might celebrate the traffic increase at the top of the funnel, but there is not much analysis of the new traffic quality and the conversions at the downstream stages.

- Moreover, running experiments in this paradigm is something humans do: a data scientist forms a hypothesis, collaborates with the product and engineering teams to design an experiment, runs the experiment, collects the data, and takes action based on the results. This method of experimentation is slow, painful, prone to errors, vulnerable to human judgment, and highly inefficient.

- Most importantly, this approach doesn’t utilize the full scale and capability of the available data and machine learning power. Making a good decision at each stage independently results in local optima that might differ substantially from the global optimum of the overall system.

Therefore, we suggest another approach that minimizes human involvement and enables models to optimize both the customer journey within each stage and the entire process itself.

Disclaimer: Opinions are my own and not the views of my employer. I frequently update my articles. This is version 1.0 of this article.

An Alternative Approach

The alternative approach is to consider a single customer's journey across the entire marketing funnel and to optimize the messaging and offers for that customer. In this approach, instead of trying to find aggregated chunks (segments) of customers that behave similarly, we utilize our aggregated knowledge of interactions with other customers to optimize the experience for this particular customer.

We can use a mathematical representation to formalize this concept. At each time step (t), which can be as granular as necessary, we want to find the best offer (o), with the message (m), through channel (c) to maximize the probability of a particular user, denoted by (u), moving from the i-th stage to the j-th (p_ij) in their journey. We can use a vector of customer attributes (x) to enrich the models. Demographic information is an example of such attributes.
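One way to write this objective down is sketched below. The feasible sets of offers, messages, and channels (written here as calligraphic O, M, and C), the time subscripts, and the stage variable S are notation assumed for illustration; they are not defined in the text above.

```latex
% Choose the offer, message, and channel that maximize the probability
% of user u moving from stage i to stage j at time step t.
(o_t^{*}, m_t^{*}, c_t^{*})
  \;=\; \arg\max_{o \in \mathcal{O},\; m \in \mathcal{M},\; c \in \mathcal{C}}
  \; p_{ij}\!\left(u, t \mid o, m, c, x_u\right),
\qquad
p_{ij}\!\left(u, t \mid o, m, c, x_u\right)
  \;=\; \Pr\!\left(S_{t+1} = j \mid S_t = i,\; o, m, c, x_u\right)
```

Here S_t stands for the stage of user u at time t, and x_u is that user's attribute vector.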

There are different ways to model a solution to this problem. Since we don’t directly observe the stage a customer is currently in, we can use the Hidden Markov Model (HMM) framework to estimate the transition probabilities and the impact of different offers and messages on the transition from one stage to another.
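As a minimal sketch of the HMM idea, the forward (filtering) recursion below turns a sequence of observed events into a belief over the hidden stage. All stage names, event names, and probabilities are hypothetical and hard-coded for illustration. In practice the parameters would be estimated from data, and the transition probabilities would also depend on the offer, message, and channel.

```python
from typing import List

STAGES = ["awareness", "interest", "decision", "action"]

# Hypothetical parameters, for illustration only.
# TRANSITION[i][j] = P(next stage = j | current stage = i)
TRANSITION = [
    [0.80, 0.20, 0.00, 0.00],
    [0.00, 0.75, 0.25, 0.00],
    [0.00, 0.00, 0.70, 0.30],
    [0.00, 0.00, 0.00, 1.00],
]

# EMISSION[i][event] = P(observed event | stage = i)
EMISSION = [
    {"ad_view": 0.80, "site_visit": 0.15, "cart_add": 0.04, "purchase": 0.01},
    {"ad_view": 0.30, "site_visit": 0.55, "cart_add": 0.14, "purchase": 0.01},
    {"ad_view": 0.10, "site_visit": 0.40, "cart_add": 0.45, "purchase": 0.05},
    {"ad_view": 0.05, "site_visit": 0.15, "cart_add": 0.10, "purchase": 0.70},
]

# Prior belief over the stage before any event is observed.
INITIAL = [0.90, 0.08, 0.02, 0.00]


def filter_stage(events: List[str]) -> List[float]:
    """Forward algorithm: P(current stage | all events observed so far)."""
    n = len(STAGES)
    belief = list(INITIAL)
    for step, event in enumerate(events):
        if step > 0:
            # Predict: push the belief through the transition matrix.
            belief = [
                sum(belief[i] * TRANSITION[i][j] for i in range(n))
                for j in range(n)
            ]
        # Update: weight each stage by how likely the observed event is there.
        belief = [belief[j] * EMISSION[j][event] for j in range(n)]
        total = sum(belief)
        belief = [b / total for b in belief]
    return belief


if __name__ == "__main__":
    journey = ["ad_view", "site_visit", "cart_add", "purchase"]
    for stage, prob in zip(STAGES, filter_stage(journey)):
        print(f"{stage}: {prob:.3f}")
```

The returned belief can then serve as the state estimate on which the choice of offer, message, and channel is conditioned.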

Another method is to assume the company can reliably observe, or predict, the current stage of a customer in their journey. Usually, companies use a combination of behavioral events to flag customers as being at a specific stage. For example, visiting the website is considered the start of the interest stage. In this case, a Markov Decision Process (MDP) is a natural choice: at each time period (t), the customer is in a state (s), and we estimate the probability of transitioning into the next state (s’) given the action taken.
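When stages are observable, the simplest estimate of the MDP transition probabilities is a count-based one. The sketch below assumes a hypothetical interaction log of (state, action, next state) records, where the action is the (offer, channel, message) triplet. The record layout and all names are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Action = Tuple[str, str, str]          # (offer, channel, message)
Record = Tuple[str, Action, str]       # (state, action, next_state)


def estimate_transitions(
    log: Iterable[Record],
) -> Dict[Tuple[str, Action], Dict[str, float]]:
    """Maximum-likelihood estimate of P(next_state | state, action) from counts."""
    counts: Dict[Tuple[str, Action], Counter] = defaultdict(Counter)
    for state, action, next_state in log:
        counts[(state, action)][next_state] += 1

    probs: Dict[Tuple[str, Action], Dict[str, float]] = {}
    for key, next_counts in counts.items():
        total = sum(next_counts.values())
        probs[key] = {s2: n / total for s2, n in next_counts.items()}
    return probs


if __name__ == "__main__":
    email_discount = ("discount", "email", "friendly")
    log = [
        ("interest", email_discount, "decision"),
        ("interest", email_discount, "interest"),
        ("interest", email_discount, "interest"),
        ("interest", email_discount, "decision"),
    ]
    print(estimate_transitions(log)[("interest", email_discount)])
```

Raw counts get sparse quickly as the number of triplets grows, so a real system would likely replace them with a model that also uses the customer attributes (x).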

Reinforcement Learning (RL) is the most common way to solve this problem. The agent (the company or marketer) takes an action consisting of the triplet of offer, channel, and message (o, c, m) and receives a reward (for example, the customer clicking through to the website, or other engagements leading up to the purchase). In theory, RL methods such as policy gradient methods or Q-learning can find solutions to this problem.
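To make the RL framing concrete, here is a minimal tabular Q-learning sketch on a toy funnel. The simulator, its stage-advance probabilities, the three candidate triplets, and the hyperparameters are all invented for illustration. A real system would learn from logged or live customer interactions rather than from a simulator.

```python
import random
from typing import Dict, List, Tuple

STATES = ["interest", "decision", "purchase"]  # "purchase" is terminal
ACTIONS = [
    ("no_offer", "email", "informative"),
    ("discount", "email", "urgent"),
    ("discount", "push", "friendly"),
]

# Hypothetical simulator: P(advance to the next stage | state, action index).
ADVANCE_PROB = {
    "interest": [0.05, 0.60, 0.10],
    "decision": [0.05, 0.10, 0.80],
}


def step(state: str, action_idx: int, rng: random.Random) -> Tuple[str, float]:
    """Simulated customer response; the reward is 1.0 only on purchase."""
    if rng.random() < ADVANCE_PROB[state][action_idx]:
        next_state = STATES[STATES.index(state) + 1]
    else:
        next_state = state
    reward = 1.0 if next_state == "purchase" else 0.0
    return next_state, reward


def q_learning(
    episodes: int = 20000,
    max_steps: int = 20,
    alpha: float = 0.02,
    gamma: float = 0.8,
    epsilon: float = 0.2,
    seed: int = 0,
) -> Dict[str, List[float]]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {s: [0.0] * len(ACTIONS) for s in STATES}
    for _ in range(episodes):
        state = "interest"
        for _ in range(max_steps):
            if state == "purchase":
                break
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=q[state].__getitem__)  # exploit
            next_state, reward = step(state, a, rng)
            # Q-learning update: bootstrap from the best next-state value.
            target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: Dict[str, List[float]]) -> Dict[str, Tuple[str, str, str]]:
    """Best known (offer, channel, message) triplet for each non-terminal stage."""
    return {
        s: ACTIONS[max(range(len(ACTIONS)), key=q[s].__getitem__)]
        for s in ADVANCE_PROB
    }


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

Because the reward only arrives at purchase, the value learned for the interest stage depends on what happens downstream. That is the cross-stage coupling that stage-by-stage optimization misses.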

A Compromise

Each optimization problem has different structures and conditions that could be utilized to make it easier to solve and find more efficient algorithms. In this case, we can reduce the complexity with the following observations:

- The funnel structure means the number of people at each successive stage is substantially smaller than at the previous one. It’s common to have conversion rates of around 1% to 10% at the top of the funnel, which means a 10X to 100X reduction in the number of people. We can utilize this fact to:

  - Only model the lower stages of the funnel,

  - Capture the available information on the people at the top of the funnel, and summarize it as a state for the downstream stages.

- In practice, substantial correlations exist between engagement metrics at different stages. For example, efforts that increase the click-through rate usually tend to cause a rise of similar magnitude in purchases. However, given the side effects mentioned earlier, such as extra top-of-funnel traffic of lower quality, these correlations should not be relied upon too heavily.

- We can utilize human judgment to substantially reduce the space of strategies. Although not all human judgment is applicable or based on factual data, much contextual information and many communication norms can be incorporated into the solution space. Examples are removing any offensive or impolite message, or requiring a consistent message throughout the funnel.
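The last observation, pruning the strategy space with human judgment, can be sketched as a simple filter over candidate (offer, channel, message) triplets. The candidate values and the two rules below are hypothetical stand-ins for whatever norms a marketing team would actually encode.

```python
from itertools import product
from typing import List, Tuple

# Hypothetical candidate values for each component of an action.
OFFERS = ["no_offer", "10_percent_off", "free_shipping"]
CHANNELS = ["email", "push", "sms"]
MESSAGES = ["friendly", "urgent", "aggressive"]

Action = Tuple[str, str, str]  # (offer, channel, message)


def is_allowed(offer: str, channel: str, message: str) -> bool:
    """Hand-written rules standing in for human judgment."""
    if message == "aggressive":
        return False  # communication norm: never send impolite messages
    if channel == "sms" and offer == "no_offer":
        return False  # assumed norm: do not text customers without an offer
    return True


all_actions: List[Action] = list(product(OFFERS, CHANNELS, MESSAGES))
allowed_actions: List[Action] = [a for a in all_actions if is_allowed(*a)]

if __name__ == "__main__":
    print(f"{len(all_actions)} candidates, {len(allowed_actions)} after pruning")
```

Every triplet removed here is one the learning algorithm never has to spend customer interactions exploring.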

Attribution or Credit Assignment

A key question in managing a marketing funnel is how much each channel contributes to delivering a conversion. In addition to being used to allocate the marketing budget across channels, attribution models are commonly used in Cost Per Action (CPA) pricing to pay publishers and price the inventory. A few heuristic-based methods for attribution modeling are:

- First-Touch Attribution: Credits the first touchpoint for driving customer interest and initiating the customer journey.

- Last-Touch Attribution: Credits the last touchpoint for influencing the conversion decision as it was the last interaction before conversion.

- Linear Attribution: Distributes credit evenly across all touchpoints, assuming equal influence on conversion.

- Time Decay Attribution: Assigns higher credit to touchpoints closer to conversion and lower credit to those further in the past.

- U-Shaped or Position-Based Attribution: Credits first and last touchpoints more (e.g., 40% each) with remaining credit (e.g., 20%) distributed evenly among touchpoints in between.
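The heuristics above can be sketched as a single function that splits one conversion's credit across the channels in a journey. The function name, the model labels, the exponential form of the time decay, and the handling of journeys with one or two touchpoints are assumptions for illustration. The 40/20/40 split follows the example figures given above.

```python
from typing import Dict, List


def attribute(
    touchpoints: List[str], model: str = "linear", decay: float = 0.5
) -> Dict[str, float]:
    """Split one conversion's credit (summing to 1.0) across channels.

    `touchpoints` lists channels in chronological order; the last one is
    the touch closest to the conversion.
    """
    n = len(touchpoints)
    if n == 0:
        return {}
    if model == "first_touch":
        weights = [1.0] + [0.0] * (n - 1)
    elif model == "last_touch":
        weights = [0.0] * (n - 1) + [1.0]
    elif model == "linear":
        weights = [1.0 / n] * n
    elif model == "time_decay":
        # Assumed form: credit shrinks by `decay` per step away from conversion.
        raw = [decay ** (n - 1 - i) for i in range(n)]
        weights = [r / sum(raw) for r in raw]
    elif model == "u_shaped":
        if n == 1:
            weights = [1.0]
        elif n == 2:
            weights = [0.5, 0.5]  # assumption: no middle touches to share 20%
        else:
            middle = 0.2 / (n - 2)
            weights = [0.4] + [middle] * (n - 2) + [0.4]
    else:
        raise ValueError(f"unknown model: {model}")

    # A channel that appears several times accumulates its credit.
    credit: Dict[str, float] = {}
    for channel, weight in zip(touchpoints, weights):
        credit[channel] = credit.get(channel, 0.0) + weight
    return credit


if __name__ == "__main__":
    journey = ["display", "search", "email", "search"]
    for name in ["first_touch", "last_touch", "linear", "time_decay", "u_shaped"]:
        print(name, attribute(journey, name))
```

Running the same journey through all five models shows how much the credit given to a channel depends on the chosen heuristic rather than on the data.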

While simple methods such as linear attribution can perform well in practice, they don’t capture customer heterogeneity. Therefore, not only are they unsuitable for suggesting the next communication channel for a specific customer, but they can also be misleading for aggregate-level budget optimization across channels.

To keep attribution modeling simple, most of the more advanced statistical learning models also ignore contextual information about individual customers. The underlying assumption is that the distribution of customer heterogeneity in the future will resemble that of the current population, which could be devastatingly wrong. Moreover, such models are not suitable for making decisions at the individual level.

Furthermore, multi-device attribution and measurements for different members of a household or shared devices are complicated.

Measurements and A/B Testing

To make matters worse, correctly measuring the impact of using different channels and running A/B tests is also challenging. A few common problems are:

- Limiting exposure: Keeping the control and treatment groups cleanly separated is problematic when customers might get messages across many channels. Traditional channels, such as TV, billboards, and print, are particularly hard to combine and examine together with digital channels.

- Time lags and the attribution window: The time lag between exposure and conversion, together with the choice of attribution window, makes measurements questionable.

- Data accuracy and quality: Ensuring data accuracy and quality across various sources is difficult, which leads to potential discrepancies and incorrect insights.

- Privacy and data regulations: Compliance with privacy and data regulations affects the type and amount of data that can be collected, impacting measurement accuracy.

- Lack of standardized measurement frameworks: The absence of standardized measurement frameworks makes it challenging to benchmark performance and compare results.

- Heterogeneity of channels: Differences between channels make it difficult to define the same metrics for all of them. For example, the click-through rate is undefined for cable TV or print.

Practical Considerations

Radical environmental change can make any model that was trained once on historical data irrelevant. Statisticians and data scientists use the term non-stationary to refer to these types of environments. A few considerations help in tackling this issue:

- In addition to changes to the macroeconomic factors, individual people’s states might change and cause them to behave differently. We need to capture changes at multiple levels correctly.

- In training models, we need to increase the weight of the most recent data to a level that doesn’t make a model unstable.

- We can frequently retrain production models to ensure they are up to date with recent data. Retraining models in production requires monitoring and performance tests to ensure their reliability.

- The preference should be for simpler algorithms. In practice, simple models tend to outperform more complex models in both performance and maintainability.
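The recency-weighting point above can be sketched with exponentially decaying sample weights. The half-life parameterization and the use of Kish's effective sample size as a rough stability check are choices made for this sketch, not prescriptions from the text.

```python
from typing import List


def recency_weights(
    ages_in_days: List[float], half_life_days: float = 30.0
) -> List[float]:
    """Exponential decay: a sample `half_life_days` old counts half as much
    as a sample observed today."""
    return [0.5 ** (age / half_life_days) for age in ages_in_days]


def effective_sample_size(weights: List[float]) -> float:
    """Kish's effective sample size: (sum of w)^2 / (sum of w^2).

    A small value means a few recent samples dominate training, which is
    one way a model can become unstable.
    """
    total = sum(weights)
    return total * total / sum(w * w for w in weights)


if __name__ == "__main__":
    ages = list(range(365))  # one observation per day over the past year
    for half_life in (7.0, 30.0, 180.0):
        w = recency_weights(ages, half_life)
        print(f"half-life {half_life:>5} days -> "
              f"effective sample size {effective_sample_size(w):.1f}")
```

Shortening the half-life makes the model track recent behavior more closely but shrinks the effective sample size, which is the trade-off behind weighting recent data only up to the point where the model stays stable. Many training libraries accept such weights as a per-sample weight argument.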

Summary

Optimizing a marketing funnel can improve a business’s success, but it’s very challenging. Data scientists lack a conceptual framework to guide their efforts and usually focus on local optimization.

The current approach to optimizing a marketing funnel suffers from deficits such as manual interventions, lack of personalized communications, and local optimization in each stage. A new approach is suggested to address these issues, utilizing models to optimize the customer journey and the entire process with minimal human involvement.

The alternative approach is to consider a single customer journey and optimize the messaging and offers using aggregated data. The problem can be formalized with a Hidden Markov Model (HMM) or a Markov Decision Process (MDP), and Reinforcement Learning (RL) is the most common method used to find a solution. The agent, a company or marketer, takes an action and receives a reward, and RL methods provide ways to find a near-optimal solution.

To simplify the problem, we can leverage the funnel structure. There are correlations between engagement metrics at different stages. We can incorporate human judgment to reduce the strategy space and only model downstream stages. Various heuristic-based methods for attribution modeling are available, such as First-Touch, Last-Touch, Linear, Time Decay, and U-Shaped. Simple methods like Linear may perform well. Multi-device attribution and measurements for shared devices are complicated.

Running A/B tests can be challenging due to problems such as limiting exposure, time lags in the attribution window, data accuracy and quality, privacy and data regulations, lack of standardized measurement frameworks, and heterogeneity of channels. These challenges make it difficult to ensure data accuracy and quality, benchmark performance, and compare results.

Radical environmental change can render statistical models irrelevant. To address this, statisticians and data scientists must recognize non-stationary environments and use different techniques including capturing changes at multiple levels, weighting recent data appropriately, frequently retraining models, and using simpler algorithms that are easier to maintain.